Electronic English version since 2022

The newspaper was founded in November 1957


Science to practice

JINR experience in the use of deep neural networks in agriculture

By decision of the 137th session of the JINR Scientific Council, the first prize in the category "Applied physics research" for 2024 was awarded to the paper by A.V. Uzhinsky, G.A. Ososkov and A.V. Nechaevsky "Deep learning techniques for meeting various challenges in agriculture".

In agriculture, artificial intelligence technologies are used to detect growth problems, identify plant diseases, predict crop yields, map fields, optimize resource use, and for many other tasks.

The Joint Institute for Nuclear Research has specialists with the relevant expertise and an excellent resource base for carrying out major research in machine learning. Since 2017, the Meshcheryakov Laboratory of Information Technologies (MLIT) has been running various projects that apply deep learning techniques to pressing agricultural problems.

In 2017, a group of MLIT researchers won a grant from the Russian Foundation for Basic Research to develop an integrated system for diagnosing plant diseases from their images and text descriptions. By then, deep convolutional neural networks had proven effective in a wide range of image classification tasks, because such networks learn to extract from images the significant features required for efficient classification.

By 2017, the idea of using convolutional neural networks to diagnose plant diseases had already been explored in a number of studies. Most of them trained their models on the PlantVillage database, which contains 54,306 images of 14 crop species and 26 diseases. The first models developed at MLIT also used this database. However, when tested on real-world data, such models performed very poorly. The problem was that the PlantVillage images had been collected under controlled conditions: they were taken under the same lighting against a gray background, with the leaves detached and positioned in a similar way. As a result, PlantVillage had to be abandoned, and to train models that work in real conditions we had to build our own image database. This required image cataloging tools, convenient interfaces for working with the models, and tools for handling disease descriptions, treatment recommendations and user requests. To address these tasks, MLIT developed a multifunctional plant disease detection platform (PDDP), which later evolved into the DoctorP project.

At the initial stage, in 2018, images were collected from open sources. The first version of the database contained only 350 images in five classes of grape leaves, and the smallest class had just over 30 images.

By that time, well-known convolutional neural network architectures such as ResNet and MobileNet had already appeared; trained on very large image datasets, they showed steadily improving results. This made transfer learning widely used, a technique suited to small training sets: the weights of the main part of a large backbone network such as ResNet, which is responsible for extracting significant features, are "frozen", and only the weights of a few output layers are fine-tuned on the small dataset. This significantly reduces the training resources required while still giving good results. Transfer learning nevertheless requires a database of images on which the network is further trained.
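The freeze-and-fine-tune idea can be illustrated with a minimal, dependency-free Python sketch. It is not the MLIT code: a fixed random projection followed by ReLU stands in for a pretrained backbone such as ResNet (an assumption made to keep the example self-contained), and only a small logistic-regression head on top of it is trained. The names `backbone`, `train_head` and `predict` are hypothetical.

```python
import math
import random

random.seed(0)

IN_DIM, FEAT_DIM = 4, 8

# Stand-in for a pretrained backbone (assumption): a fixed random projection.
# These weights are "frozen": nothing below ever modifies them.
BACKBONE = [[random.gauss(0.0, 1.0) for _ in range(IN_DIM)] for _ in range(FEAT_DIM)]


def backbone(x):
    """Frozen feature extractor: ReLU(W x)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(samples, labels, epochs=200, lr=0.1):
    """Fine-tune only the output layer (a binary logistic-regression head)."""
    feats = [backbone(x) for x in samples]  # computed once, since the backbone is frozen
    w, b = [0.0] * FEAT_DIM, 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            g = p - y  # gradient of binary cross-entropy with respect to the logit
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b


def predict(head, x):
    w, b = head
    return 1 if sum(wi * fi for wi, fi in zip(w, backbone(x))) + b > 0 else 0
```

Because the backbone is frozen, its features are computed only once and just `FEAT_DIM + 1` parameters are updated; in a real setting the head would typically be a softmax layer over all disease classes.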

However, transfer learning with the image database available at the time did not give the required accuracy. Similar difficulties have been reported in other studies that use image databases collected in the field. After studying the literature, we found a solution in specialized training approaches, namely few-shot learning, i.e., learning from only a few examples per class. Just as a person recognizes someone by comparing them with people they already know, the few-shot approach replaces direct classification with a comparison of significant features (embeddings) previously extracted by the convolutional layers of the neural network. Siamese networks are one example of this approach. They are trained by feeding the network images of the same or different classes. When contrastive loss is minimized, training is done on pairs of images. Both images pass through the network to obtain their representations in a multidimensional feature space. If the images belong to the same class, the model weights are adjusted so that the resulting vectors move closer together in Euclidean space; if the classes differ, the vectors are pushed further apart. Using contrastive loss made it possible to achieve accuracy above 98%. The approach also worked better because the number of image pairs available for training grows quadratically with the number of images, which substantially enlarges the training set.
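The pair-based objective can be sketched in Python. The code below uses the classic margin-based form of contrastive loss (squared distance for same-class pairs, squared hinge on a margin for different-class pairs); the article does not give the exact variant or margin, so these are assumptions, and the function names are hypothetical. It also shows how a few-shot prediction compares embeddings instead of classifying directly, and how the pairs are enumerated.

```python
import math
from itertools import combinations


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_a, emb_b, same_class, margin=1.0):
    """Contrastive loss for one pair of embeddings.

    Same class: the squared distance is penalized, pulling the vectors together.
    Different class: only pairs closer than `margin` are penalized, pushing them apart.
    """
    d = euclidean(emb_a, emb_b)
    if same_class:
        return d ** 2
    return max(0.0, margin - d) ** 2


def make_pairs(labels):
    """All unordered image pairs (i, j) with a same-class flag: n*(n-1)/2 of them."""
    return [(i, j, labels[i] == labels[j]) for i, j in combinations(range(len(labels)), 2)]


def classify_by_nearest(query, references):
    """Few-shot prediction: the label of the reference embedding closest to the query.

    `references` is a list of (embedding, label) tuples.
    """
    return min(references, key=lambda ref: euclidean(query, ref[0]))[1]
```

For example, the first 350-image database mentioned above already yields 350 × 349 / 2 = 61,075 distinct pairs, compared with 350 individual training images.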

As the number of images and disease classes in the database grew and the cloud platform expanded accordingly, these solutions no longer achieved the same accuracy. Various experiments with selecting optimal data augmentation policies did not bring significant improvements. The natural next step for the Siamese approach was to switch to the three-term triplet loss. With this approach, the input consists of two images of one class and one image of another class. Training adjusts the weights so that, in the multidimensional space, the representation vectors of images of the same class move closer together, while those of different classes move as far apart as possible. As a result, accuracy above 97% was achieved on 25 image classes.
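A minimal sketch of the triplet objective, assuming plain Euclidean distances and a margin of 1.0 (some implementations use squared distances instead; the article does not specify the variant). The helper names are hypothetical, and enumerating every triplet, as `make_triplets` does, is only practical for toy data; real training usually samples or mines triplets.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def make_triplets(labels):
    """All (anchor, positive, negative) index triplets.

    The positive shares the anchor's class; the negative belongs to another class.
    """
    triplets = []
    for a, la in enumerate(labels):
        for p, lp in enumerate(labels):
            if p == a or lp != la:
                continue
            for n, ln in enumerate(labels):
                if ln != la:
                    triplets.append((a, p, n))
    return triplets
```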

The search for the optimal loss function continued throughout the research. Loss functions designed for Siamese networks, such as contrastive, triplet and quadruplet loss, were replaced by the angular loss functions SphereFace, ArcFace and CosFace. Angular loss functions also push the representation vectors of different classes apart, but they do so not in Euclidean space but on the surface of a hypersphere, by working with the angles between normalized vectors. Experiments were also carried out to select the optimal base architecture. At various times, networks such as ResNet, MobileNet, EfficientNet, ViT and ConvNeXt were used, both in their original form and with various modifications, for example, with added attention blocks.
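As an illustration of how an angular loss works, here is a minimal single-sample sketch of ArcFace, one of the functions named above: the embedding and the per-class weight vectors are normalized onto the unit hypersphere, and an additive angular margin `m` is added to the angle between the embedding and its own class before a scaled softmax. The scale `s=30` and margin `m=0.5` are illustrative values, not the ones used at MLIT, and production implementations add extra handling for the case θ + m > π, which this sketch omits.

```python
import math


def l2_normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def arcface_loss(embedding, class_weights, target, s=30.0, m=0.5):
    """ArcFace loss for a single sample.

    After normalization each logit depends only on the angle between the
    embedding and a class weight vector; the target angle is enlarged by m.
    """
    e = l2_normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos_t = sum(a * b for a, b in zip(e, l2_normalize(w)))
        theta = math.acos(max(-1.0, min(1.0, cos_t)))  # clamp guards against rounding
        if j == target:
            theta += m  # additive angular margin for the true class
        logits.append(s * math.cos(theta))
    # Softmax cross-entropy, stabilized by subtracting the largest logit.
    mx = max(logits)
    log_sum_exp = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_sum_exp - logits[target]
```

The margin makes training harder on purpose: the same embedding incurs a larger loss with `m > 0`, which pushes classes to occupy tighter, better-separated regions of the sphere.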

In addition to work on algorithms and networks, experiments were carried out to expand the image database using neural network approaches. A number of experiments used generative adversarial networks (DCGAN, WGAN, WGAN-GP, AWGAN, StyleGAN) to generate new images or to transfer the features of one class to another (CycleGAN). However, the high computational cost and insufficient quality of the synthesized images seriously limited the use of these techniques. At the same time, low-rank adaptation (LoRA) of diffusion models such as Stable Diffusion and Kandinsky shows very encouraging results.
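The idea behind LoRA can be shown on a single linear layer: the pretrained weight matrix W stays frozen, and only a low-rank correction B·A (with rank r much smaller than the layer size) is trained, scaled by alpha / r. The minimal Python sketch below follows that formulation; the class name, initialization scale and default values are illustrative assumptions, and it does not reproduce how LoRA is wired into Stable Diffusion or Kandinsky.

```python
import random


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """Linear layer y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is frozen; only A (r x d_in) and B (d_out x r) are trainable.
    """

    def __init__(self, W, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        self.W = W  # pretrained weights, never updated
        d_out, d_in = len(W), len(W[0])
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]  # zero init: no change at the start
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

Because B starts at zero, the adapted layer initially behaves exactly like the pretrained one. For a 64 × 32 layer with r = 4, only 4 × 32 + 64 × 4 = 384 parameters are trained instead of 2,048.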

In parallel with the techniques, the platform itself evolved, and it now hosts a whole collection of models. In the first step of processing a user request, a general model for diseases and pests is applied regardless of plant species or crop (68 classes). Next, a model determines the plant species (71 classes), and if a specialized model exists for this species (there are 27 such models), the user receives its prediction in addition to the general one. The result shows the two classes closest to the uploaded image. In most cases, this makes it possible to identify the disease correctly and obtain recommendations for its treatment. To interact with the platform, mobile applications for iOS and Android, a Telegram bot and an application programming interface (API) that gives third-party services access to the platform's capabilities were developed. To date, the platform has processed more than 250,000 user requests.
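The request flow described above can be sketched as follows. The three kinds of model are represented by hypothetical callables that return a similarity score per class; the function and argument names, and the choice to take the single highest-scoring species, are assumptions for illustration rather than the platform's actual API.

```python
from typing import Callable, Dict, List, Tuple

Scores = Dict[str, float]           # class name -> similarity to the uploaded image
Model = Callable[[object], Scores]  # hypothetical model interface


def top_k(scores: Scores, k: int = 2) -> List[Tuple[str, float]]:
    """The k classes closest to the uploaded image (the result shows two)."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


def process_request(image, general: Model, species: Model,
                    specialized: Dict[str, Model]) -> dict:
    # Step 1: general disease and pest model, independent of plant species.
    result = {"general": top_k(general(image))}
    # Step 2: determine the plant species.
    plant = top_k(species(image), k=1)[0][0]
    result["species"] = plant
    # Step 3: add a species-specific prediction only if such a model exists.
    if plant in specialized:
        result["specialized"] = top_k(specialized[plant](image))
    return result
```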

Besides classification, detection problems are of great interest for agriculture. While classification determines which disease is present in an image, detection determines the coordinates of the objects in the image and their classes.
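The difference between the two tasks is easiest to see in the shape of their outputs. In this illustrative Python sketch (the class labels, coordinates and `Detection` type are hypothetical), classification returns one label per image, whereas detection returns a list of objects, each with a class and a bounding box.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    label: str                      # class of the detected object
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


# Classification: "which disease is in this image?" -> a single label.
classification_result = "late blight"

# Detection: "where are the objects and what are they?" -> one entry per object.
detection_result: List[Detection] = [
    Detection("late blight", (120, 40, 260, 190)),
    Detection("late blight", (300, 210, 410, 330)),
    Detection("healthy", (20, 300, 110, 400)),
]


def count_by_class(detections: List[Detection]) -> Counter:
    """Number of detected objects of each class, e.g. diseased plants per disease."""
    return Counter(d.label for d in detections)
```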

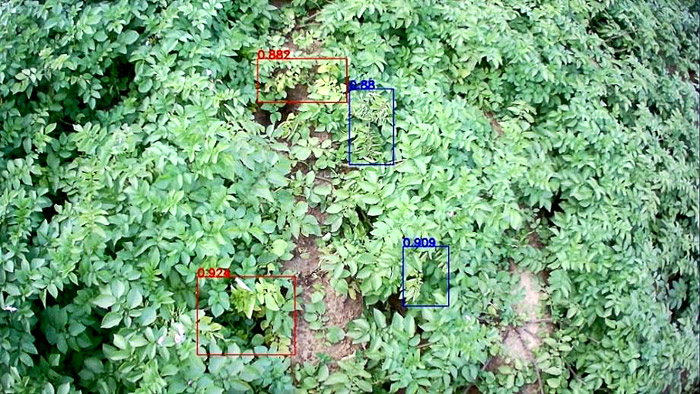

In a joint project of MLIT and "Doka - Gene Technologies", methods for identifying various potato diseases were developed. Research was carried out in several areas at once. To detect problems in the fields, hardware and software systems with HRCs installed on agricultural machinery were used. Detection and segmentation tasks are of the greatest interest. Detection determines the image regions that contain an object of interest, i.e., a plant with signs of disease. Segmentation delineates the objects themselves and determines all the pixels that belong to them.

Examples of images with detected symptoms of potato disease
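The relationship between segmentation and detection can be illustrated with a binary mask: segmentation marks every pixel of an object, and the smallest rectangle enclosing those pixels is the kind of region a detector outputs. A minimal Python sketch, assuming a mask stored as nested lists of 0/1 values and inclusive pixel coordinates (both illustrative choices); the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # mask[y][x] == 1 where the pixel belongs to the object


def mask_to_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Smallest box (x_min, y_min, x_max, y_max), inclusive, containing every object pixel."""
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) for v in row if v]
    if not xs:
        return None  # empty mask: nothing detected
    return min(xs), min(ys), max(xs), max(ys)


def mask_area(mask: Mask) -> int:
    """Number of pixels assigned to the object by segmentation."""
    return sum(v for row in mask for v in row)
```

For an L-shaped object of three pixels, the enclosing box covers four pixels: the box locates the object, while the mask describes its exact shape.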

As a result of the research, not only were the most promising neural network architectures and approaches to model training identified, but options for visualizing data on detections in the fields were also tested. Another research area is the analysis of hyperspectral images to find patterns that make it possible to identify diseased plants even before visible symptoms appear. The studies confirmed that various neural network and statistical algorithms can be used to classify hyperspectral images of diseased and healthy potatoes of different varieties. During the project, approaches to training and validating various models were worked out; various data pre-processing options were developed and tested, and the most promising of them were identified; and combinations of spectral channels were found that yield a vegetation index showing the signs of disease most clearly.
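One simple way to search for such a channel combination is to compute a normalized-difference index (the form used by NDVI) for every pair of spectral channels and keep the pair that best separates diseased from healthy samples. The sketch below is a minimal illustration under stated assumptions: the separation score (difference of class means over the sum of standard deviations) is a hypothetical criterion, not the one used in the project, and spectra are plain lists of reflectance values.

```python
from itertools import combinations
from statistics import mean, pstdev


def normalized_difference(spectrum, i, j):
    """NDVI-style index (R_i - R_j) / (R_i + R_j) from channels i and j."""
    a, b = spectrum[i], spectrum[j]
    return (a - b) / (a + b) if a + b else 0.0


def separation(healthy, diseased, i, j):
    """Hypothetical score: gap between class means relative to their spread."""
    h = [normalized_difference(s, i, j) for s in healthy]
    d = [normalized_difference(s, i, j) for s in diseased]
    spread = (pstdev(h) + pstdev(d)) or 1e-9  # avoid division by zero
    return abs(mean(h) - mean(d)) / spread


def best_channel_pair(healthy, diseased):
    """Channel pair (i, j) whose index best separates the two groups."""
    n_channels = len(healthy[0])
    return max(combinations(range(n_channels), 2),
               key=lambda pair: separation(healthy, diseased, *pair))
```

Swapping i and j only flips the sign of the index, and the score ignores the sign, so checking each unordered pair once is enough.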

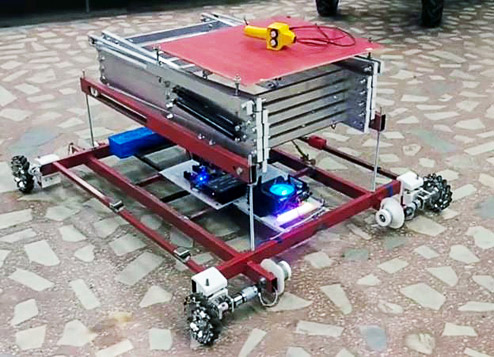

Another interesting research area is the automation of monitoring and record-keeping in greenhouse complexes. The project is carried out jointly with the Engineering Centre of Dubna University. Within the project, an autonomous robotic platform is being developed that can move on various types of surfaces, record indicators of interest and take pictures at heights of up to four meters. The result of the work should be a digital twin of the greenhouse complex that shows up-to-date information on the number of plants in a row and their characteristics, problems detected, various indicators (temperature, humidity, illumination) and more. The project combines technical tasks (design and development of the platform's hardware components), robotics tasks (mapping, localization, autonomous movement, execution of route tasks), organizational tasks (image processing, displaying the results on a map) and neural network tasks (tracking and counting, classification, localization and identification of diseases and pests).
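Counting plants in a row from video usually combines per-frame detection with tracking, so that a plant seen in several consecutive frames is counted once. The article does not describe the project's tracking method; the sketch below is a deliberately simple greedy nearest-neighbour (centroid) tracker, with the point format and the `max_dist` threshold as illustrative assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def count_plants(frames: List[List[Point]], max_dist: float = 30.0) -> int:
    """Count distinct plants seen in a sequence of video frames along a row.

    Each frame is a list of detected plant centres in pixels. A detection
    continues the nearest still-unmatched track from the previous frame if it is
    within `max_dist`; otherwise it starts a new track. Each track is one plant.
    """
    n_tracks = 0
    previous: List[Point] = []  # track positions in the previous frame
    for detections in frames:
        unmatched = list(previous)
        for p in detections:
            nearest = min(unmatched, key=lambda q: math.dist(p, q), default=None)
            if nearest is not None and math.dist(p, nearest) <= max_dist:
                unmatched.remove(nearest)  # same plant as in the previous frame
            else:
                n_tracks += 1  # a plant not seen before
        previous = list(detections)
    return n_tracks
```

This tracker forgets a plant as soon as it is missed in one frame, so a single missed detection leads to double counting; practical trackers keep lost tracks alive for a few frames and predict their motion.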

On the left: the plant-counting model at work on a row; on the right: a fully functional prototype of the complex

To date, a fully functional prototype of the robotic platform, a prototype of the monitoring and record-keeping complex, and a number of models for counting plants, assessing their condition, and detecting pests and signs of various diseases have been developed.

The use of artificial intelligence and automation in agriculture can increase yields and reduce costs. MLIT conducts major research of not only scientific but also practical significance. Its results include original techniques, algorithms and approaches, as well as hardware and software systems used in real conditions. Students from various universities, including Dubna University, are actively involved in these projects.

Gennady OSOSKOV, Alexander UZHINSKY